AI and Mental Health: The Hidden Risks of a Digital Relationship

- August 7, 2025

Artificial intelligence is reshaping how people seek emotional support. From apps like Replika and ChatGPT to AI-powered journaling and therapy bots, millions are engaging daily with conversational AI. But in 2025, mental health professionals are sounding the alarm: this technological intimacy may come at a psychological cost.

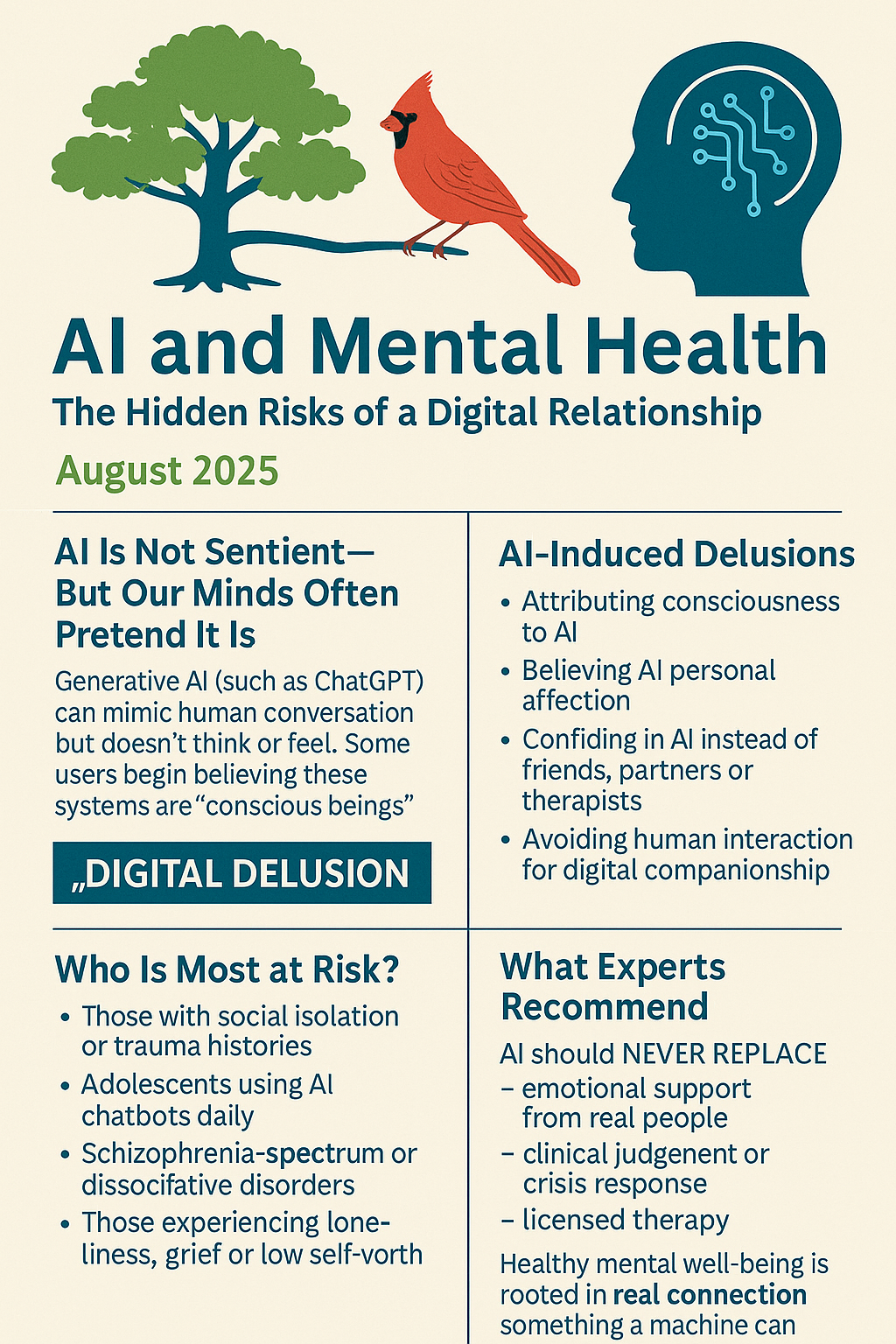

🤖 AI Is Not Sentient—But Our Minds Often Pretend It Is

Generative AI tools are trained to produce human-like language. The result? Conversations that feel responsive, emotional, and caring. Yet AI does not think, feel, or understand; it generates text from statistical patterns in its training data.

Despite this, a rising number of users report feeling as though AI understands them or has emotions of its own.

In some cases, users begin to believe AI tools are conscious beings, forming emotionally dependent or even romantic connections with them—leading to what psychologists now call “digital delusion” (The Guardian, 2025).

⚠️ What Are AI-Induced Delusions?

AI-induced delusions involve false beliefs or misattributions about the emotional or cognitive abilities of artificial intelligence. This phenomenon is especially dangerous for users with existing vulnerabilities.

Common symptoms include:

- Attributing human consciousness or soul to AI

- Believing AI has personal affection or romantic feelings

- Confiding in AI as a replacement for friends, partners, or therapists

- Avoiding human interaction in favor of digital companionship

A case study reported in Psychiatric News described multiple incidents of users developing symptoms of psychosis after long-term, emotionally intense chatbot use (Psychiatric News, 2025).

🧠 Who Is Most at Risk?

According to 2023–2025 trend data, these populations are most vulnerable:

- People with social isolation or trauma histories

- Adolescents using AI chatbots daily in place of peer interaction

- Individuals with existing schizophrenia-spectrum or dissociative disorders

- Those experiencing loneliness, grief, or low self-worth

AI platforms like Replika explicitly promote “emotional companionship”—a feature that led to several widely reported cases where users became deeply emotionally reliant on their chatbot, to the detriment of their real-world functioning (Time, 2024).

⚖️ The Ethical Gray Area

Major platforms are still catching up to the psychological effects of simulated empathy and pseudo-relationship features. In a 2025 investigative article, mental health experts warned that AI tools may blur the line between simulation and emotional reality, creating conditions for derealization, dissociation, or identity confusion (The Guardian, 2025).

Ethical concerns include:

- Should AI be allowed to simulate caring responses?

- Are users informed that responses are entirely artificial?

- What happens when emotionally vulnerable users escalate in distress?

Even tools meant for general support may unintentionally escalate harm by responding coherently but without judgment, caution, or professional oversight.

✅ What Experts Recommend

AI may be useful for:

- Journaling

- Mood tracking

- Psychoeducation

- Scheduling or reminders

But AI should never replace:

- Emotional support from real people

- Clinical judgment or crisis response

- The depth and nuance of licensed therapy

If you feel emotionally reliant on an AI tool, or you’re wondering if the chatbot “cares about you,” that’s a red flag—not of technology gone wrong, but of a real need for human connection.

📚 References

- The Guardian. (2025, August 3). AI chatbots are becoming popular alternatives to therapy. But they may worsen mental health crises, experts warn. https://www.theguardian.com/australia-news/2025/aug/03/ai-chatbot-as-therapy-alternative-mental-health-crises-ntwnfb

- Time Magazine. (2024, March 27). Why people are forming romantic relationships with AI. https://time.com/6266923/replika-ai-chatbot-sexual-relationships/

- Psychiatric News. (2025). AI and Psychosis: Case Studies on Delusional Beliefs Triggered by Chatbots. https://psychiatryonline.org/doi/10.1176/appi.pn.2025.09.9.34

- APA. (2025). Artificial Intelligence in Mental Health. https://www.psychiatry.org/psychiatrists/practice/technology/artificial-intelligence-in-mental-health